Armada

Armada is an open-source, full-stack application that simplifies the process of configuring and deploying containerized development environments in the cloud.

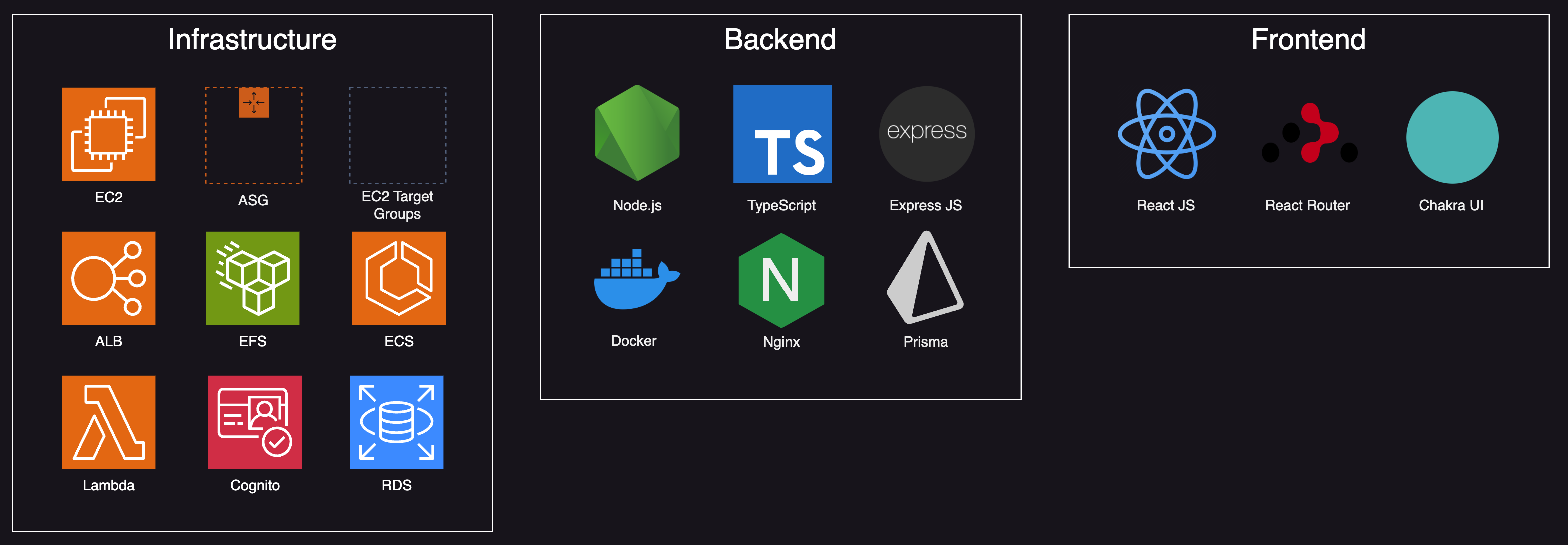

Tech Stack

Node.js, TypeScript, Express, Docker, Nginx, Prisma ORM, AWS Application Load Balancer, AWS EC2 Target Groups, AWS Auto Scaling Groups, AWS EC2 Instances, AWS Elastic Container Service, AWS Lambda, AWS Relational Database Service, AWS Cognito, React, React Router DOM, Chakra UI, AWS Amplify Cognito

Armada - Streamlining Development Environments

1. Introduction

Armada addresses challenges in configuring development environments for students and instructors. Its approach utilizes containerization, cloud infrastructure, and user-friendly interfaces to create a seamless and scalable solution.

2. Problem Statement

Configuration, dependency management, and resource allocation for development environments pose a multitude of challenges for both students and instructors. Students struggle with complex environment setups, dependency management, and hardware limitations, while instructors grapple with diverse hardware configurations, operating systems, software versions, and student experience levels.

3. Project Objectives

To overcome these challenges, Armada was developed with the following goals in mind:

- Create user-friendly development environments

- Enable efficient management and deployment for instructors

- Ensure scalability to meet fluctuating demand

- Minimize costs for instructors

4. Key Stakeholders

Armada is fundamentally designed for education, aiming to serve a wide spectrum of instructors and students by providing powerful features without sacrificing ease of use. Specifically, it's tailored for Computer Science and Programming instructors who seek a self-hosted, open-source tool they can adapt to their unique requirements.

5. Tech Stack

6. Milestones

With our objectives in mind, we identified eight essential milestones for creating, provisioning, and managing development environments that both instructors and students could use:

- Containerizing workspaces

- Provisioning cloud infrastructure

- Deploying single workspaces

- Deploying multiple workspaces

- Implementing dedicated URLs for workspace access

- Ensuring data persistence

- Establishing relationships between user entities

- Enabling user authentication

6.1 Containerizing workspaces

GitPod vs Code-Server

When developing Armada, our primary challenge was to design a coding environment accessible via a URL. We evaluated two Docker images, Coder's code-server and GitPod. Either could have served as the foundation for Armada workspaces, but each had its own trade-offs. GitPod provided more features and integrations; however, its image was approximately 7 GB, with a typical startup time of 5-10 minutes. The code-server image, by contrast, was under 1 GB, met all our functional requirements, and loaded in under 20 seconds. Because one of our main objectives was to minimize costs, we chose code-server as our base image. This decision let us run more workspace containers per server than the GitPod image would have allowed.

6.2 Provisioning Cloud Infrastructure

Cloud Agnostic vs Cloud-Native

At the start of Armada's development, we faced a pivotal architectural choice: should we go cloud-native or cloud-agnostic? This decision would directly affect the application's reliability, scalability, and maintenance costs.

A cloud-native approach lets developers bypass the undifferentiated heavy lifting by shifting the operational management to the cloud provider. This means they can concentrate on the app's core features without redoing generic tasks. However, a major drawback is vendor lock-in: developers get tied to a specific provider's framework, making it challenging to switch to another cloud platform without significant changes.

A cloud-agnostic approach aims to create applications that are easily deployable across multiple cloud providers, helping to sidestep vendor lock-in. While this might suggest a one-size-fits-all solution, in practice adapting the code to each provider's environment still takes effort. Furthermore, developers may find themselves handling tasks such as applying security patches, updating virtual machine operating systems, and overseeing backups, which contradicts one of our core objectives.

After weighing pros and cons, we chose a cloud-native strategy, selecting Amazon Web Services (AWS) to host our application. AWS offered convenient services for development, minimizing system administration. Its extensive tooling ecosystem, encompassing CLI, SDK, and CDK, improved our prototyping and iteration capabilities, providing a cohesive developer experience.

6.3 Deploying Single Workspaces

CDK vs CloudFormation

Up to this point, we had relied largely on click-ops in the AWS Console. Recognizing that this approach would not scale, we turned to Infrastructure as Code (IaC) to automate provisioning.

Among options such as CloudFormation and Terraform, the AWS Cloud Development Kit (CDK) stood out. It lets developers define cloud resources in familiar general-purpose languages such as TypeScript, promoting simplicity and uniformity, and it meant we could use TypeScript consistently across our entire stack. Terraform uses HCL and CloudFormation uses JSON or YAML, whereas CDK code is synthesized into CloudFormation templates, so it integrates natively with AWS.

Proof of Concept with EC2, Nginx Proxy, and Code-Server

The next step on our roadmap was to set up a developer environment in the cloud. To see how a coding environment would perform in real time, we began with a basic prototype: a single code-server container on an EC2 instance, with an Nginx reverse proxy forwarding requests to it. Though straightforward, this prototype validated the application's viability and set the stage for a scalable solution.

K8s vs Docker Swarm vs ECS

After weighing the trade-offs, we chose ECS. ECS offers deep integration with the AWS ecosystem, simplifying setup and management for those familiar with AWS services. Compared to Kubernetes, ECS is often easier to use and manage, making it a lightweight alternative for projects that do not require Kubernetes' extensive features. While Docker Swarm is closely integrated with Docker tools, it doesn't match the feature set and popularity of ECS or Kubernetes.

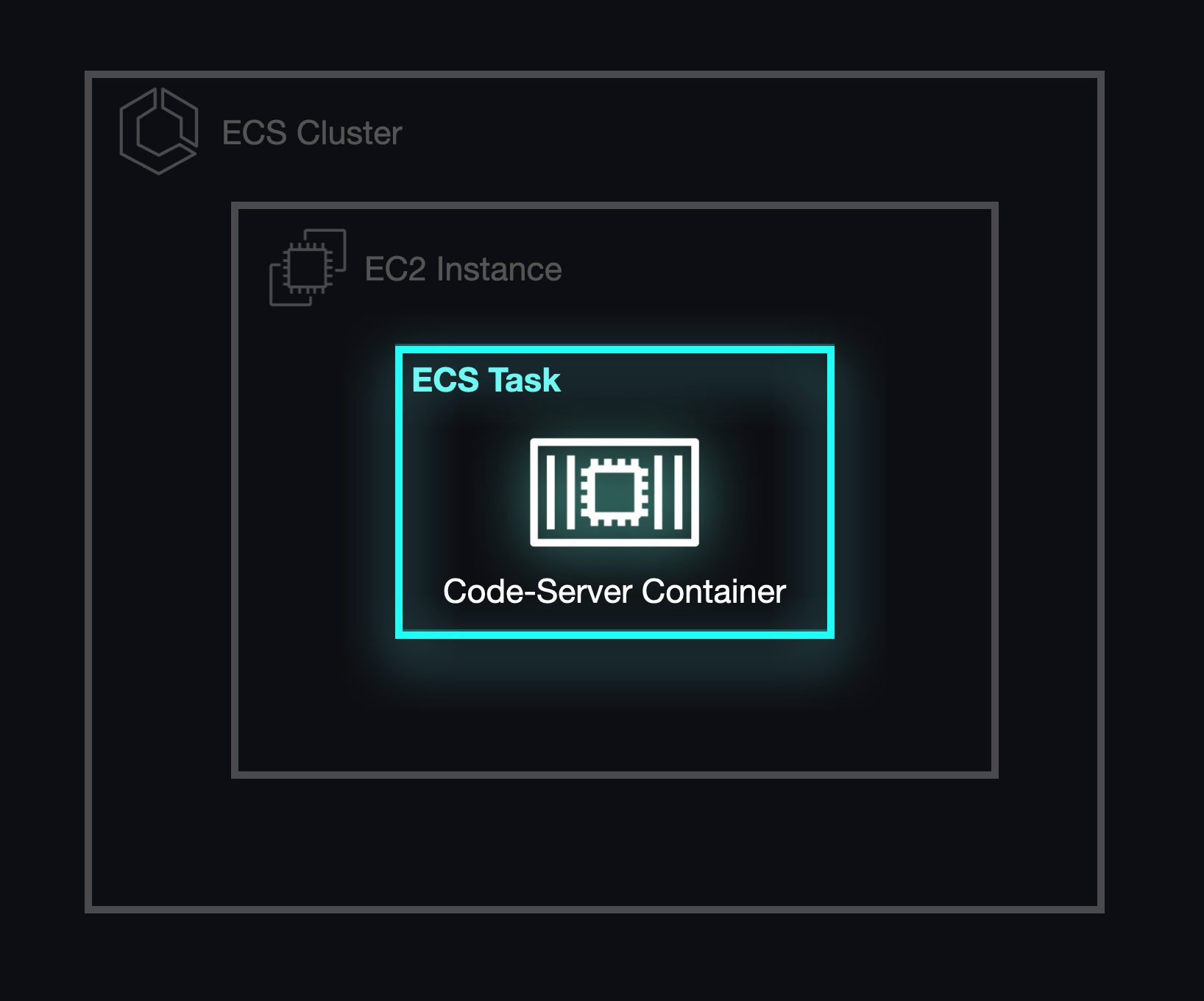

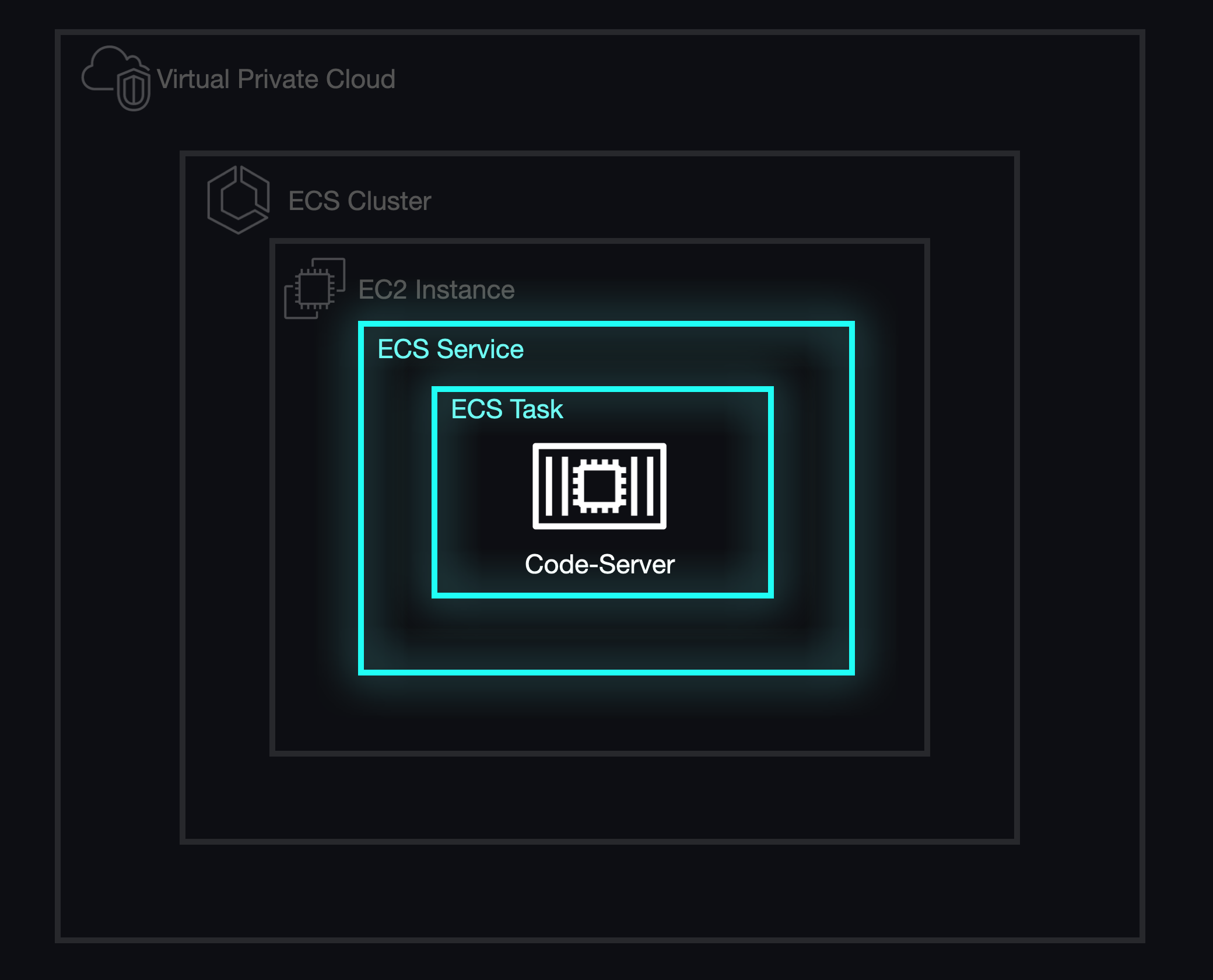

Deploying Single ECS Tasks

We initially adopted ECS by deploying standalone ECS tasks, which let us run workspace environments online. However, standalone tasks offered little control over container health and monitoring: if a task failed, ECS would not restart it automatically. Standalone tasks also lacked the load balancer integration that ECS services provide, which would become essential once we set out to route each student to the correct workspace. We therefore looked for a more reliable and scalable way to run our containers.

6.4 Deploying Multiple Workspaces

Deploying ECS Services

To deliver our main feature, which was to provision an arbitrary number of workspaces, we required a system that could both manage the health and lifecycle of our developer environments and scale the infrastructure behind these containers. Eventually, we determined that ECS Services were necessary to achieve our objectives.

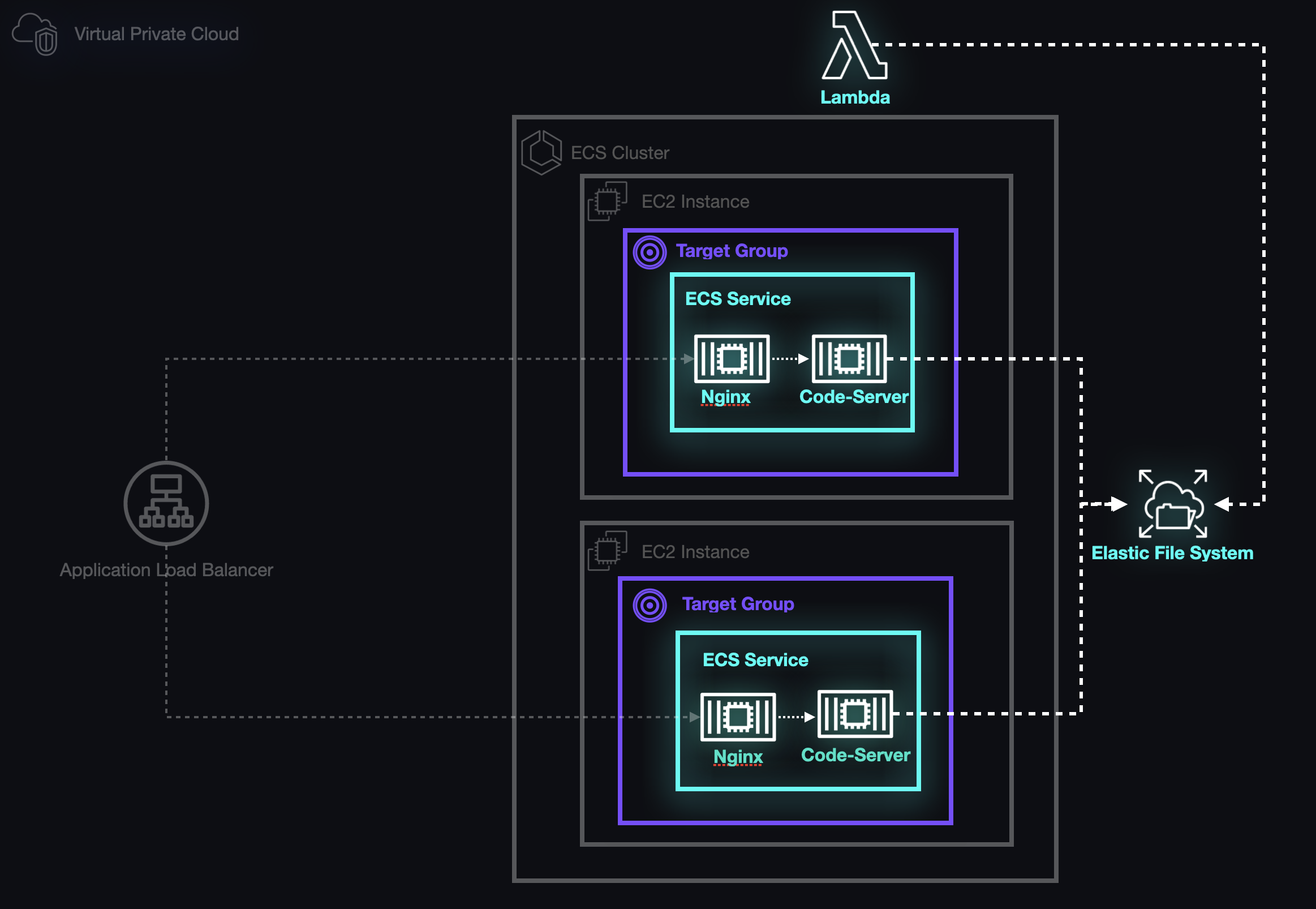

ECS services are designed to monitor and manage their tasks automatically. Health checks, such as the HTTP checks run by a load balancer target group, request a predefined URL and expect a 200 response to confirm that a workspace is functioning properly. If a task is flagged as "unhealthy," the service replaces it with a new task based on the service's task definition.
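
The health-check behavior described above can be modeled in a few lines. The TypeScript below is a simplified sketch, not the ECS or ALB implementation: it assumes an ALB-style HTTP check that expects status 200 and treats a task as unhealthy after a configurable number of consecutive failures, in the spirit of a target group's unhealthy threshold. The `/healthz` path and the threshold value used in practice are hypothetical.

```typescript
// Simplified model of HTTP health checking (assumption: ALB-style checks
// that expect a 200 and use a consecutive-failure threshold).
interface HealthCheckConfig {
  path: string;                    // probed URL path, e.g. "/healthz" (hypothetical)
  expectedStatus: number;          // Armada's checks expect 200
  unhealthyThresholdCount: number; // consecutive failures before "unhealthy"
}

interface TaskHealth {
  taskId: string;
  recentStatuses: number[]; // HTTP status of each probe, most recent last
}

// A task is unhealthy when its last N probes all returned an unexpected status.
function isUnhealthy(task: TaskHealth, config: HealthCheckConfig): boolean {
  const n = config.unhealthyThresholdCount;
  if (task.recentStatuses.length < n) return false; // not enough evidence yet
  return task.recentStatuses
    .slice(-n)
    .every((code) => code !== config.expectedStatus);
}

// IDs of the tasks the service would stop and replace from its task definition.
function tasksToReplace(tasks: TaskHealth[], config: HealthCheckConfig): string[] {
  return tasks.filter((t) => isUnhealthy(t, config)).map((t) => t.taskId);
}
```

Real health checks also involve probe intervals, timeouts, and a healthy threshold for recovery, which this sketch leaves out.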

For Armada, we had a simple requirement: a single task for each student's workspace within a service. A bonus with this arrangement was the ability to start or stop individual workspaces simply by adjusting the task count for a particular service from zero to one, and vice-versa.
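
Because each workspace service runs at most one task, starting or stopping a workspace amounts to setting the service's desired task count to 1 or 0. The sketch below builds that request as a plain object whose fields mirror a subset of the ECS `UpdateService` parameters (`cluster`, `service`, `desiredCount`); the helper name and the cluster and service names are hypothetical.

```typescript
// Mirrors a subset of the ECS UpdateService request parameters,
// defined locally so the sketch is self-contained.
interface UpdateServiceInput {
  cluster: string;
  service: string;
  desiredCount: number;
}

type WorkspaceState = "running" | "stopped";

// Hypothetical helper: one task per workspace service, so 1 = running, 0 = stopped.
function setWorkspaceState(
  cluster: string,
  service: string,
  state: WorkspaceState,
): UpdateServiceInput {
  return { cluster, service, desiredCount: state === "running" ? 1 : 0 };
}
```

With the AWS SDK for JavaScript v3, an object like this could be passed to `new UpdateServiceCommand(input)` from `@aws-sdk/client-ecs` and sent with an `ECSClient`; the service then launches or stops the workspace's task on its own.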

Cluster Auto-Scaling

After figuring out how to deploy multiple workspaces using services, we encountered a new challenge. Although ECS handles the services and tasks, the underlying Docker containers run on EC2 instances with constrained resources, most notably memory (RAM). To address this, we turned to ECS capacity providers.

ECS capacity providers essentially serve as an abstraction layer over EC2 Auto Scaling Groups. By using them, we were able to harness their tight integration with EC2 Auto Scaling, enabling us to specify how our ECS tasks influenced the scaling of the underlying EC2 instances. This approach not only gave us finer control over our infrastructure but also ensured that we always had the necessary resources for our tasks, despite the limitations of individual instances.
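
The reasoning behind capacity providers can be made concrete with a rough calculation. ECS managed scaling tracks a `CapacityProviderReservation` metric of M / N * 100, where M is the number of instances the tasks need and N is the number currently running; with a target capacity of 100, the Auto Scaling Group is scaled toward M = N. The sketch below estimates M by packing tasks by memory alone (ECS's own logic considers more than this) and shows a capacity provider definition whose fields mirror a subset of the ECS `CreateCapacityProvider` parameters. The instance and task sizes and the provider name are hypothetical.

```typescript
// Mirrors a subset of the ECS CreateCapacityProvider parameters.
interface CapacityProviderInput {
  name: string;
  autoScalingGroupProvider: {
    autoScalingGroupArn: string;
    managedScaling: { status: "ENABLED" | "DISABLED"; targetCapacity: number };
    managedTerminationProtection: "ENABLED" | "DISABLED";
  };
}

function workspaceCapacityProvider(asgArn: string): CapacityProviderInput {
  return {
    name: "armada-workspaces", // hypothetical name
    autoScalingGroupProvider: {
      autoScalingGroupArn: asgArn,
      // targetCapacity 100: scale so that M (needed) equals N (running).
      managedScaling: { status: "ENABLED", targetCapacity: 100 },
      managedTerminationProtection: "DISABLED",
    },
  };
}

// Hypothetical sizing inputs, not Armada's real instance or task sizes.
interface ClusterSizing {
  instanceMemoryMiB: number; // memory available to tasks on one instance
  taskMemoryMiB: number;     // memory reserved by one workspace task
}

// Simplified estimate of M: instances needed when packing tasks by memory only.
function instancesNeeded(runningTasks: number, s: ClusterSizing): number {
  const perInstance = Math.floor(s.instanceMemoryMiB / s.taskMemoryMiB);
  if (perInstance < 1) throw new Error("a task does not fit on one instance");
  return Math.ceil(runningTasks / perInstance);
}

// M / N * 100; this sketch does not model the N = 0 special case.
function capacityProviderReservation(m: number, n: number): number {
  if (n <= 0) throw new Error("sketch assumes at least one running instance");
  return (m / n) * 100;
}
```

For example, with 3,800 MiB per instance and 1,024 MiB per workspace, three workspaces fit on each instance, so seven workspaces need three instances; if only two are running, the metric reads 150, above the target of 100, and the group scales out.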

Service Auto-Scaling

With workspaces running as tasks supervised by ECS services, we could easily start or stop them and recover from unexpected failures within them. We could also tune the scaling of EC2 instances to track the demand of active workspaces, avoiding unnecessary idle capacity.

6.5 Implementing Dedicated URLs for Workspace Access

Static vs Dynamic Port Mapping

Up to this point, we had provisioned a separate EC2 instance for each service running a workspace, because the host port was hardcoded in our task definitions. This was inefficient, since a single EC2 instance has enough resources to run several workspaces. Sharing an instance among multiple services, however, meant ensuring that no two workspaces used the same host port, a well-known port-conflict problem in ECS.

When setting up a container, we decide how its ports map to ports on the host (the EC2 instance running the container). There are two options:

- Assign (hardcode) a specific port for the container, known as static port mapping.

- Let the host system automatically pick an available port, called dynamic port mapping.
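
The difference between the two options shows up directly in the `portMappings` of an ECS container definition. In the sketch below, whose interface mirrors those fields, container port 8080 (code-server's default) is an assumed choice, and `hostPortConflicts` is a hypothetical helper, not an ECS API: it reports the host ports that more than one task on a single instance would claim.

```typescript
// Mirrors the portMappings entries of an ECS container definition.
interface PortMapping {
  containerPort: number;
  hostPort: number; // 0 = let the host assign a port (dynamic mapping)
}

// Static mapping: every workspace claims host port 8080.
const staticMapping: PortMapping = { containerPort: 8080, hostPort: 8080 };
// Dynamic mapping: the host port is chosen when the task starts.
const dynamicMapping: PortMapping = { containerPort: 8080, hostPort: 0 };

// Host ports requested by more than one task placed on the same instance.
function hostPortConflicts(mappings: PortMapping[]): number[] {
  const seen: number[] = [];
  const conflicts: number[] = [];
  for (const m of mappings) {
    if (m.hostPort === 0) continue; // dynamic ports are not fixed up front
    if (seen.indexOf(m.hostPort) !== -1 && conflicts.indexOf(m.hostPort) === -1) {
      conflicts.push(m.hostPort);
    }
    seen.push(m.hostPort);
  }
  return conflicts;
}
```

Two statically mapped workspaces on one instance collide on port 8080, while two dynamically mapped ones produce no conflict, which is why dynamic mapping lets several workspaces share an instance.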

Elastic Load Balancing and the Application Load Balancer (ALB)

After understanding static and dynamic port mapping, we found a solution in Elastic Load Balancing.

We placed an Application Load Balancer (ALB) in front of the ECS cluster to act as the primary access point for our application.

The Application Load Balancer supports dynamic host port mapping, which allowed us to run multiple workspace tasks on the same EC2 instance. To enable it, we set the host port in each service's task definition to 0. Instead of binding a fixed port, Docker then assigns a host port from the ephemeral port range of the EC2 instance running the container, and ECS registers that instance and port with the service's target group so the ALB can route traffic to it.
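
The toy model below illustrates what a host port of 0 achieves: each task placed on the same instance receives a different host port, and that instance-and-port pair is registered as a target in the workspace's own target group. The port range, instance ID, and lowest-free-port strategy are illustrative assumptions; in practice the port comes from the instance's ephemeral range and ECS performs the registration.

```typescript
// Illustrative port range; the real range comes from the instance's settings.
const EPHEMERAL_START = 49153;
const EPHEMERAL_END = 65535;

// A registered target: which instance, and which host port on it.
interface Target {
  instanceId: string;
  port: number;
}

class Ec2Instance {
  private usedPorts: number[] = [];
  constructor(readonly id: string) {}

  // Toy strategy: take the lowest free port (real assignment order may differ).
  assignPort(): number {
    for (let p = EPHEMERAL_START; p <= EPHEMERAL_END; p++) {
      if (this.usedPorts.indexOf(p) === -1) {
        this.usedPorts.push(p);
        return p;
      }
    }
    throw new Error("no free ephemeral ports");
  }
}

// Start a task with hostPort 0 and register it with its target group.
function placeTask(instance: Ec2Instance, targetGroup: Target[]): Target {
  const target: Target = { instanceId: instance.id, port: instance.assignPort() };
  targetGroup.push(target);
  return target;
}
```

Two workspaces placed on one instance end up on different host ports, each registered only in its own target group, so the ALB can reach both without a port collision.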

Round Robin Routing

In a typical setup, a load balancer distributes traffic evenly across interchangeable servers. In Armada, however, each workspace belongs to a specific student, which makes the workspaces stateful. The default round-robin routing of the Application Load Balancer (ALB) therefore posed a problem: each new request went to the next task in rotation, so students could end up connected to other students' workspaces rather than their own.
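
The problem with round-robin routing is easy to see in a toy model. The class below is an illustrative stand-in for a load balancer, not the ALB's implementation, and the workspace names are hypothetical: consecutive requests from the same student are spread across every workspace in turn.

```typescript
// Toy round-robin balancer: each request goes to the next target in turn,
// regardless of which student sent it.
class RoundRobin<T> {
  private next = 0;
  constructor(private readonly targets: T[]) {}

  pick(): T {
    if (this.targets.length === 0) throw new Error("no targets");
    const target = this.targets[this.next];
    this.next = (this.next + 1) % this.targets.length;
    return target;
  }
}
```

If Alice's, Bob's, and Carol's workspaces sit behind one such balancer, Alice's first three requests land in three different workspaces, which is exactly the behavior Armada needed to avoid.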

Path-based routing with Target Groups

To solve the issue of directing students to their specific, stateful workspaces, we used target groups in our Application Load Balancer (ALB). Each workspace was assigned its own target group, which allowed us to route traffic based on unique URLs. We employed a consistent naming convention to create these URLs and added corresponding rules to the ALB. By combining this setup with dynamic port mapping and Nginx containers for URL rewriting, we were able to give each workspace a unique path, allowing students to access their dedicated environments as if they were standalone applications.
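
The routing setup above can be sketched as follows. The `CreateRuleInput` interface mirrors a subset of the Elastic Load Balancing v2 `CreateRule` parameters; the lowercase `cohort-course-student` path format, the ARNs, and the priority are hypothetical. `stripPrefix` is an illustrative TypeScript equivalent of the rewrite the Nginx containers perform, so that code-server receives paths relative to its own root.

```typescript
// Mirrors a subset of the ELBv2 CreateRule parameters.
interface CreateRuleInput {
  ListenerArn: string;
  Priority: number;
  Conditions: { Field: "path-pattern"; Values: string[] }[];
  Actions: { Type: "forward"; TargetGroupArn: string }[];
}

// Hypothetical naming convention: cohort-course-student, lowercase, URL-safe.
function workspacePath(cohort: string, course: string, student: string): string {
  return [cohort, course, student]
    .map((s) => s.toLowerCase().replace(/[^a-z0-9]+/g, "-"))
    .join("-");
}

// Forward /<path>/* to the workspace's own target group.
function workspaceRule(
  listenerArn: string,
  targetGroupArn: string,
  path: string,
  priority: number,
): CreateRuleInput {
  return {
    ListenerArn: listenerArn,
    Priority: priority, // must be unique within the listener
    Conditions: [{ Field: "path-pattern", Values: [`/${path}/*`] }],
    Actions: [{ Type: "forward", TargetGroupArn: targetGroupArn }],
  };
}

// What the URL rewrite accomplishes: drop the workspace prefix.
function stripPrefix(requestPath: string, path: string): string {
  const prefix = `/${path}`;
  if (requestPath === prefix) return "/";
  return requestPath.indexOf(prefix + "/") === 0
    ? requestPath.slice(prefix.length)
    : requestPath;
}
```

Because listener rule priorities must be unique, the application has to track which priorities are already taken when it adds a rule for a new workspace.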

6.6 Ensuring Workspace Data Persistence

Armada addressed data persistence challenges by using Amazon Elastic File System (EFS). EFS offered scalable, on-demand file systems that preserved user data between workspace sessions, overcoming the limitations of the EBS-based approach we first considered.

EBS vs EFS

To ensure that each workspace session remains consistent, we explored two potential storage solutions: one combining EBS and S3, and another using EFS. Although EBS appeared to be the more cost-effective option, it quickly became clear that it would not suit our needs. EBS volumes are attached directly to individual EC2 instances, so we would have had to programmatically identify available mount points on each host and pass that information to each new workspace container, which would require numerous custom coordination scripts. We concluded that this approach was too complex to be a viable storage solution and turned to EFS.

EFS (Elastic File System) is a serverless, elastic file storage service that grows and shrinks automatically as files are added and removed, so there is no capacity to manage. Unlike an EBS volume, which is typically attached to a single EC2 instance, an EFS file system is mounted over the network and can be shared by many instances and containers at once. Because a workspace's files live on EFS rather than on the container or its host, they persist regardless of which container or instance the workspace runs on.

To integrate EFS into Armada, we had to satisfy two requirements. First, the directory to be used must already exist on the EFS file system before it is mounted into any container. Second, each container's task definition must declare a mount point for the EFS volume, which specifies where the directory appears inside the container once it is running.

To meet the first requirement, we used AWS Lambda functions to create a uniquely named directory on EFS for each workspace, which holds that workspace's data.
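
A minimal sketch of such a Lambda function follows. It assumes the EFS file system is mounted in the function at `/mnt/efs` (Lambda requires EFS mount paths to begin with `/mnt/`) through an access point whose root is the file system's root directory. The event shape, the naming helper, and `createHandler` are hypothetical. The file-system call is injected so the sketch runs anywhere; in a deployed function you might pass `mkdir` from `node:fs/promises`, for example `export const handler = createHandler(mkdir);`.

```typescript
// Hypothetical event sent by the Armada backend.
interface CreateDirectoryEvent {
  cohortName: string;
  courseName: string;
  studentName: string;
}

// Injected file-system call; node:fs/promises `mkdir` fits this shape.
type MkdirFn = (path: string, options: { recursive: boolean }) => Promise<unknown>;

// Assumed local mount path configured on the Lambda function.
const EFS_MOUNT_PATH = "/mnt/efs";

// Hypothetical convention: cohortName-courseName-studentName, lowercase.
function directoryName(e: CreateDirectoryEvent): string {
  return [e.cohortName, e.courseName, e.studentName]
    .map((s) => s.toLowerCase().replace(/[^a-z0-9]+/g, "-"))
    .join("-");
}

function createHandler(mkdir: MkdirFn) {
  return async (event: CreateDirectoryEvent): Promise<{ rootDirectory: string }> => {
    const name = directoryName(event);
    // recursive: true also succeeds if the directory already exists.
    await mkdir(`${EFS_MOUNT_PATH}/${name}`, { recursive: true });
    // Path relative to the EFS root, usable as a task definition's rootDirectory.
    return { rootDirectory: `/${name}` };
  };
}
```

Returning the path relative to the file system root keeps the Lambda mount location separate from the path the workspace task definition refers to.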

To successfully use Lambda and EFS for managing our workspaces, we followed these steps in order:

- Create a task definition that includes a volume pointing to a uniquely named directory on EFS.

- Use Lambda to create that directory on EFS, following a naming convention such as cohortName-courseName-studentName.

- Launch the workspace task, ensuring that the EFS volume is mounted at the /home/coder directory in the container.
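
The steps above can be sketched as the task definition the application might register for one workspace. The interface mirrors a subset of the ECS `RegisterTaskDefinition` parameters (`volumes` with `efsVolumeConfiguration`, and a container's `mountPoints`); the file system ID, image tag, memory size, volume name, and helper name are hypothetical. The key link is that `rootDirectory` names the directory created beforehand, and `containerPath` places it at `/home/coder`.

```typescript
// Mirrors a subset of the ECS RegisterTaskDefinition parameters.
interface TaskDefinitionInput {
  family: string;
  volumes: {
    name: string;
    efsVolumeConfiguration: { fileSystemId: string; rootDirectory: string };
  }[];
  containerDefinitions: {
    name: string;
    image: string;
    memory: number;
    portMappings: { containerPort: number; hostPort: number }[];
    mountPoints: { sourceVolume: string; containerPath: string }[];
  }[];
}

function workspaceTaskDefinition(workspaceName: string, fileSystemId: string): TaskDefinitionInput {
  const volumeName = "workspace-data"; // hypothetical volume name
  return {
    family: workspaceName,
    volumes: [{
      name: volumeName,
      // Must already exist on EFS (created earlier by the Lambda function).
      efsVolumeConfiguration: { fileSystemId, rootDirectory: `/${workspaceName}` },
    }],
    containerDefinitions: [{
      name: "code-server",
      image: "codercom/code-server:latest",
      memory: 1024, // hypothetical memory reservation in MiB
      portMappings: [{ containerPort: 8080, hostPort: 0 }], // dynamic host port
      mountPoints: [{ sourceVolume: volumeName, containerPath: "/home/coder" }],
    }],
  };
}
```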

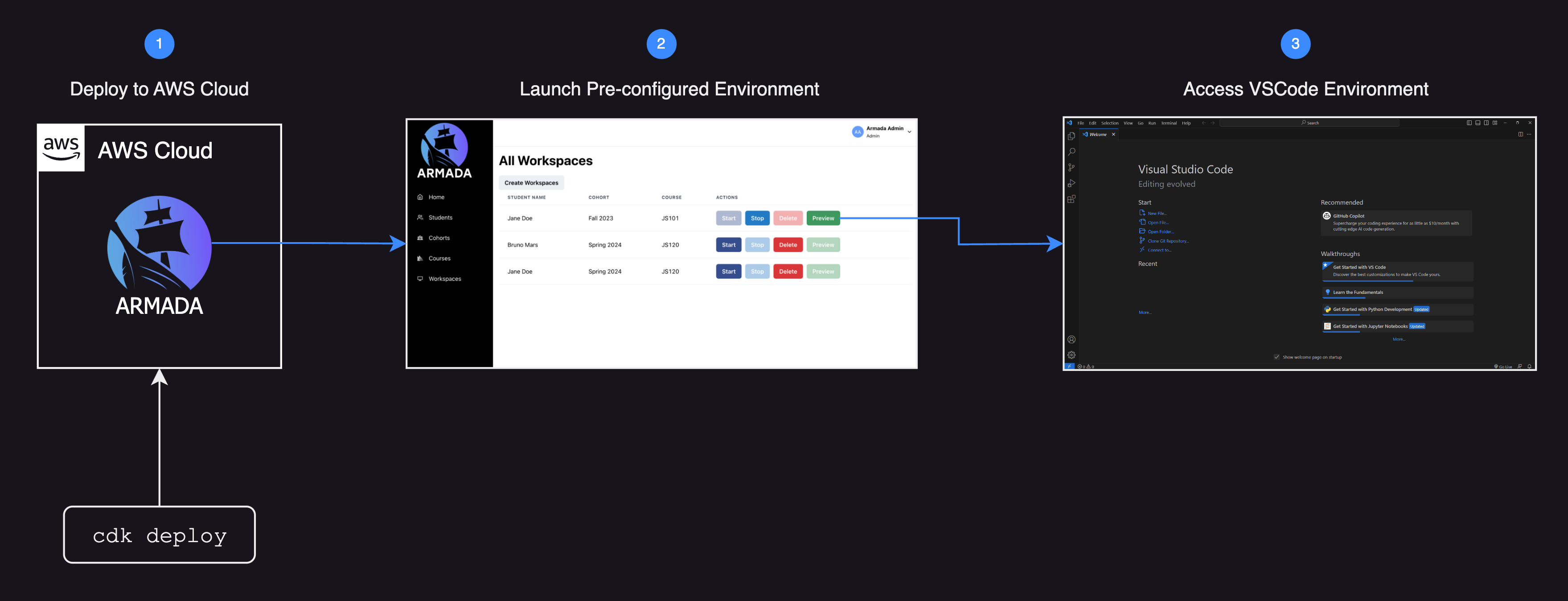

The Armada Application

To give instructors the capability to initiate student workspaces, and to allow students to access those workspaces, the Armada team developed a TypeScript-based full-stack application. This application performs all the required steps to set up the resources that users need.

For example, when an instructor sets up a workspace for a specific student, Armada automatically performs the following steps using the AWS SDK:

- Creates a task definition specific to the student, outlining the container's (workspace) configuration.

- Creates a target group for the student's workspace and attaches a rule to the listener on the ALB, allowing the workspace to receive traffic once it's operational.

- Creates a service specific to the student on ECS allowing its availability and health to be managed and monitored automatically.

- Stores the workspace's details in the application database so the newly created resources can be tracked and organized.
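
The order of these operations matters, because later steps depend on identifiers returned by earlier ones. The sketch below captures that ordering against a hypothetical `ProvisioningClient` interface; in the real application each method would wrap an AWS SDK call or a database write, which this sketch does not show, and the returned strings stand in for ARNs.

```typescript
// Hypothetical wrapper over the AWS and database calls.
interface ProvisioningClient {
  registerTaskDefinition(family: string): Promise<string>; // -> task definition ARN
  createTargetGroup(name: string): Promise<string>;        // -> target group ARN
  createListenerRule(pathPattern: string, targetGroupArn: string): Promise<void>;
  createService(name: string, taskDefinitionArn: string, targetGroupArn: string): Promise<string>;
  saveWorkspace(record: WorkspaceRecord): Promise<void>;
}

interface WorkspaceRecord {
  name: string;
  taskDefinitionArn: string;
  targetGroupArn: string;
  serviceArn: string;
}

async function provisionWorkspace(client: ProvisioningClient, name: string): Promise<WorkspaceRecord> {
  // 1. Task definition describing the student's workspace container.
  const taskDefinitionArn = await client.registerTaskDefinition(name);
  // 2. Target group plus a listener rule so the ALB can route to it.
  const targetGroupArn = await client.createTargetGroup(name);
  await client.createListenerRule(`/${name}/*`, targetGroupArn);
  // 3. Service that keeps the task healthy and registers it with the target group.
  const serviceArn = await client.createService(name, taskDefinitionArn, targetGroupArn);
  // 4. Persist what was created.
  const record: WorkspaceRecord = { name, taskDefinitionArn, targetGroupArn, serviceArn };
  await client.saveWorkspace(record);
  return record;
}
```

A production version would also need to clean up partially created resources if a later step fails, and to keep generated names within AWS limits, such as the 32-character maximum for target group names.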

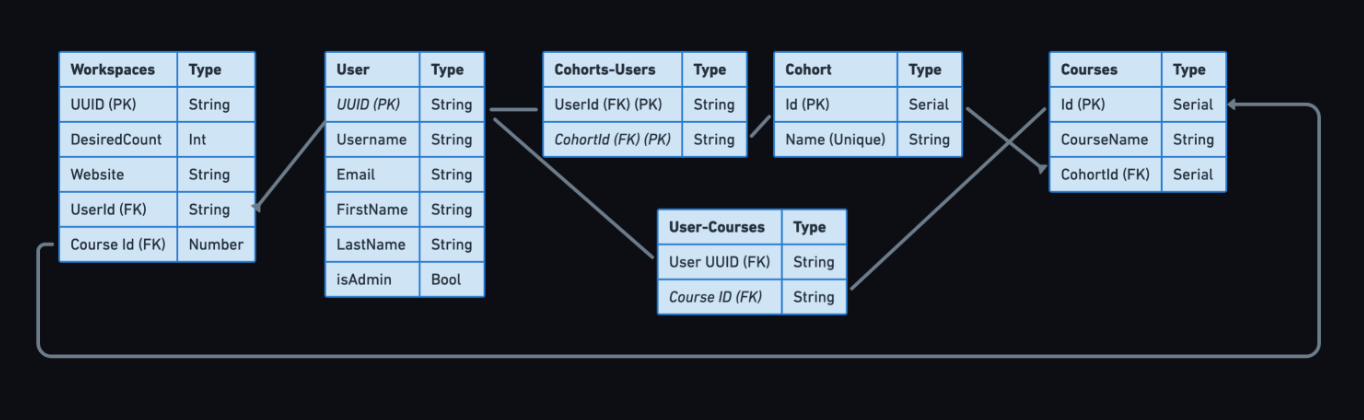

6.7 Relational Database Design

Self-hosted vs RDS

At first, we thought about running PostgreSQL on our own EC2 instance. However, this approach would place the burden of managing the instance, applying security updates, and conducting database backups on the instructor launching Armada. This contradicted one of our key objectives, which was to minimize manual management tasks.

We concluded that the relatively low cost of Amazon RDS was worth the benefits it provided. The managed service simplified setup and gave us a more robust system for production use. RDS also backs up data automatically and can recover automatically from failures of the underlying database host, improving reliability.

The Admin Node

The admin node let us run a shell script that connects to the RDS instance and initializes our database schema.

6.8 Enabling User Authentication

Authentication with Cognito

Armada incorporated Amazon Cognito, a managed authentication solution, to ensure secure user access and differentiate roles. Cognito facilitated user management, authentication, and authorization while integrating with the broader Armada ecosystem.
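
One common way to differentiate roles with Cognito is through user-pool groups, which Cognito reports in the `cognito:groups` claim of its tokens. The sketch below assumes that approach and a hypothetical group name (`instructors`); it is not necessarily how Armada assigns roles. It also assumes the token has already been verified (signature, expiry, audience), for example with AWS's `aws-jwt-verify` library, before its claims are trusted.

```typescript
// Claims from an ID token that has ALREADY been verified.
interface VerifiedClaims {
  sub: string;
  "cognito:groups"?: string[]; // present when the user belongs to groups
}

type Role = "instructor" | "student";

// Hypothetical mapping: members of "instructors" are instructors; everyone
// else falls back to the least-privileged role.
function roleFromClaims(claims: VerifiedClaims): Role {
  const groups = claims["cognito:groups"] || [];
  return groups.indexOf("instructors") !== -1 ? "instructor" : "student";
}
```

Defaulting to the least-privileged role means a missing or misconfigured group never grants instructor access.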

7. Team

Armada was built in collaboration with 3 other talented developers.

8. Conclusion

Armada effectively simplified the challenge of setting up development environments, providing a more accessible and manageable solution for both students and instructors. Utilizing container technology, cloud-based resources, and intuitive user interfaces, Armada offers a way for users to effortlessly access and handle their individual workspaces. Its innovative design and successful realization of critical objectives make it a useful proof-of-concept for educational organizations and tech-savvy educators looking for efficient, self-managed development environments.